🚀 CloudSEK becomes first Indian origin cybersecurity company to receive investment from US state fund

Read more

AI security means protecting AI systems from attacks while also using AI to strengthen an organization’s cybersecurity. It keeps the data, models, and decision processes inside these systems robust, private during inference, and resistant to manipulation.

Threats such as adversarial inputs, data poisoning, model inversion, and model extraction target how machine-learning models learn and respond to information. These attacks exploit gaps in training data or inference behavior that traditional security tools are not built to detect.

As AI becomes part of critical operations, securing these systems is essential for reliable and safe decision-making. At the same time, AI gives cybersecurity teams earlier insight into suspicious activity, helping them detect threats faster and act with greater confidence.

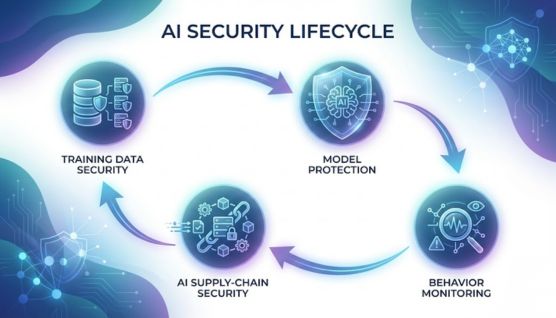

AI security works by protecting the data, models, and operational pipelines that power artificial intelligence systems throughout their lifecycle.

Training data needs to be checked for accuracy, provenance, and signs of tampering before it shapes how a model learns. This reduces the chances of poisoning attacks and helps maintain the integrity of the system’s underlying knowledge.

Models are secured through access controls, encryption, rate limits, and other safeguards that prevent extraction or inversion attempts. These protections help keep the model’s logic confidential and reduce the risk of attackers influencing its outputs.

Ongoing monitoring detects drift, strange inputs, or unexpected decisions that may signal a compromise. This visibility lets security teams respond quickly when a model starts behaving outside its normal patterns.

AI supply-chain security focuses on verifying the datasets, model components, and third-party tools used to build and run an AI system. It helps teams catch poisoned data, unsafe dependencies, or compromised libraries before they influence how the model performs in production.

AI enhances cybersecurity by detecting threats, analyzing behaviors, and identifying malicious patterns that traditional tools may overlook.

AI strengthens platforms like SIEM and EDR by correlating large volumes of security events to uncover hidden or emerging threats. These models identify early warning signs such as coordinated probing activity or unusual system behavior that may signal an attack in progress.

Machine-learning systems study how users and devices typically operate to highlight actions that fall outside normal behavioral baselines. This helps uncover subtle risks such as unusual login attempts, abnormal data access patterns, or deviations that could indicate compromised credentials.

AI analyzes communication signals, content patterns, and user interactions to surface phishing attempts, impersonation campaigns, and other forms of digital fraud. These models also detect indicators of data theft and social engineering schemes by learning how malicious actors adapt their tactics over time.

AI helps prevent cyber attacks by spotting early signs of malicious activity, accelerating detection, and automating defensive actions across digital environments.

Criminals use AI to scale attacks, improve deception, and automate techniques that would otherwise take significant time and expertise to execute.

Attackers use AI-generated videos and voice cloning to impersonate executives, employees, or customers during high-value fraud attempts. These deepfakes make social engineering schemes more convincing and harder for targets to identify.

Threat actors rely on AI models to produce new malware variants that evade signature-based detection and adapt to defensive controls. These tools help attackers refine payloads, automate code generation, and identify weaknesses in targeted systems.

AI tools craft highly personalized phishing messages and orchestrate automated chat, email, or voice interactions that mimic human communication. These models generate realistic content, adjust tone dynamically, and guide victims toward revealing credentials or sensitive information.

AI systems face specialized threats that target their data, models, and decision logic in ways traditional security measures are not designed to detect.

Attackers modify inputs in subtle ways to trick AI models into making incorrect classifications or unsafe decisions. These manipulations exploit the model’s sensitivity to small perturbations that appear normal to human observers.

Poisoning attacks involve inserting malicious or misleading samples into training datasets to distort how a model learns. These changes can remain hidden until the model is deployed, resulting in biased or harmful outputs.

Model inversion enables attackers to reconstruct sensitive information used during training by probing the model’s outputs. This exposes private data and undermines confidentiality even when the model appears secure.

Extraction attacks replicate a model’s logic or decision boundaries by repeatedly querying it and analyzing the results. This allows adversaries to steal intellectual property or deploy the cloned model for malicious purposes.

Prompt injection manipulates large language models by feeding crafted instructions that override or redirect their intended behavior. This leads to unintended outputs and creates opportunities for misuse or data exposure.

Jailbreaking techniques exploit weaknesses in model safeguards to bypass safety filters and enable restricted actions or responses. These attacks undermine the integrity of guardrails that protect against harmful or sensitive outputs.

AI security matters because organizations now rely on machine-learning systems to make decisions and automate tasks that must remain accurate and trustworthy.

AI is becoming deeply embedded in core operations, so securing these systems is essential to maintain reliability, protect data, and reduce the impact of potential disruptions.

Several well-known frameworks offer practical guidance for understanding AI risks, applying the right controls, and keeping systems accountable as they evolve.

The NIST AI Risk Management Framework helps teams evaluate how an AI system behaves and where it may be exposed to risk. It provides a structured way to improve trustworthiness through better measurement, monitoring, and governance.

ISO/IEC 42001 outlines how organizations should manage AI operations through clear processes, documentation, and oversight. It supports consistent, responsible deployment by aligning teams around shared standards and controls.

The EU AI Act sets rules based on the level of risk an AI system presents and defines what safeguards must be in place. It emphasizes transparency, data protection, and ongoing monitoring for applications used in sensitive or high-impact areas.

The OWASP Top 10 for LLMs highlights the most frequent security issues seen in large language model applications, such as prompt manipulation and insecure output handling. It gives practitioners a clear checklist for spotting vulnerabilities and strengthening generative AI systems.

Google’s Secure AI Framework encourages teams to build AI models with strong security fundamentals, from input validation to continuous monitoring. It treats AI as part of the broader security ecosystem and promotes protective practices across data, models, and infrastructure.

Strong AI security comes from combining the right technical controls with clear oversight and consistent monitoring throughout a model’s lifecycle.

Choosing an AI security solution means evaluating how well it supports real-world operations, adapts to evolving risks, and integrates into your existing security environment.

AI models rely on patterns in data, which makes them susceptible to small manipulations crafted to influence their outputs. Attackers exploit this sensitivity by altering inputs or corrupting datasets used to train the model.

Common attacks include adversarial inputs, data poisoning, model extraction, and prompt manipulation. Each technique interferes with how a model learns, classifies, or generates information.

Yes, attackers can influence model behavior by feeding crafted or misleading inputs during inference. These attacks succeed even when the underlying infrastructure remains secure.

AI analyzes behaviors, patterns, and anomalies to identify activity that would be hard for traditional tools to spot. This improves early detection and helps teams understand potential risks faster.

AI models may reveal training data if they are probed or deployed without proper privacy controls. Strong access restrictions and monitoring help prevent such leaks.

All AI systems benefit from core protections because even low-risk applications can be misused or manipulated. High-impact systems require stronger safeguards due to the severity of potential failures.

They should start by validating training data, reviewing model behavior, and limiting access to sensitive components. Continuous monitoring helps spot unexpected changes or signs of misuse early.

CloudSEK uses AI to monitor the external threat landscape and identify risks targeting your brand, data, and digital assets. Its platform analyzes signals across the internet to detect scams, impersonation attempts, data leaks, and vulnerabilities before they escalate into incidents.

CloudSEK provides organizations with early visibility into threats by combining machine learning, continuous monitoring, and automated alerts. This helps security teams act quickly, reduce exposure, and strengthen protection against external risks.

Real-Time Alerts: Sends timely notifications when CloudSEK’s AI detects emerging risks or suspicious patterns.