Key Takeaways:

Penetration testing tools are software programs that simulate cyberattacks to uncover security weaknesses. Their purpose is to show where a system can be exploited before an attacker finds the same gap.

Each tool examines configurations, services, or application behavior to identify points of failure. The findings reveal vulnerabilities that require correction.

These tools act as verification instruments for security posture. They turn potential risks into confirmed issues that can be fixed with measurable impact.

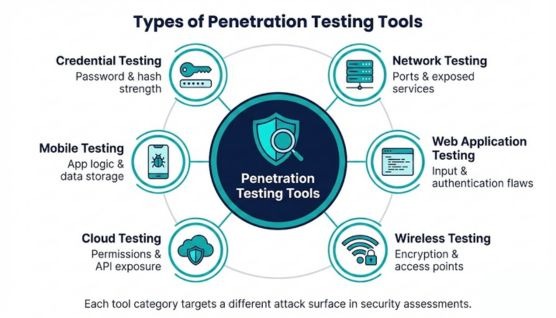

Penetration testing tools are grouped into categories based on the attack surface each category evaluates.

Network penetration testing tools analyze open ports, exposed services, and communication paths to uncover structural weaknesses. Findings often reveal outdated components, misconfigurations, and gaps in perimeter defenses.

Web application tools examine request handling, input validation, and authentication logic to detect exploitable flaws. Analysis commonly exposes injection risks, authorization failures, and insecure data processing behaviors.

Wireless testing tools inspect Wi-Fi protocols, encryption strength, and access point characteristics. Captured activity highlights weak credentials, misconfigured access points, and rogue devices broadcasting without authorization.

Cloud testing tools evaluate identity policies, storage permissions, network segmentation, and API exposure within cloud environments. Results identify misconfigurations that create unauthorized access paths or excessive privilege scenarios.

Mobile tools analyze data storage practices, API interactions, and application binaries. Evaluation uncovers insecure logic, sensitive data exposure, and vulnerabilities created through poor implementation controls.

Credential testing tools assess password strength, authentication resilience, and hashing robustness. Output frequently reveals predictable patterns, outdated hashing algorithms, and exposure to brute-force or dictionary attacks.

Tool selection focused on real-world penetration testing relevance rather than feature volume or marketing claims. Priority was given to tools that consistently appear in professional assessments across web, network, identity, wireless, and red team engagements.

Each tool was evaluated based on practical effectiveness, clarity of output, and its role within a complete testing workflow. Consideration included how well a tool supports discovery, validation, exploitation, or post-compromise analysis without unnecessary noise.

Maintenance status, community or vendor support, and adaptability to modern environments were also reviewed. Tools that enable clear proof, reproducible findings, and actionable remediation insight ranked higher than those producing purely theoretical results.

Metasploit Framework confirms whether discovered vulnerabilities can be exploited under real-world conditions. Security testers rely on it to replace theoretical severity with demonstrated technical impact.

Exploit modules, payload execution, and session handling operate inside a single coordinated workflow. Multi-stage attack validation remains possible without switching tools or losing context.

Professional penetration tests use Metasploit to demonstrate compromise paths that development teams can reproduce. The resulting evidence supports both remediation planning and executive-level risk discussions.

Metasploit Framework is chosen when assessments require defensible proof instead of vulnerability listings. Exploit validation supports impact-based risk prioritization.

Burp Suite Professional approaches web security from the perspective of live traffic rather than static analysis. Every request, parameter, and response can be intercepted and reshaped to observe how the application reacts under manipulation.

Automated scanning accelerates initial discovery, but Burp’s real strength appears during manual exploration of authorization logic and application state. Token handling, privilege boundaries, and workflow assumptions become visible once requests are replayed and modified deliberately.

In practice, Burp often reveals flaws that never appear in automated reports because they depend on sequence, role context, or subtle request differences. The resulting evidence aligns closely with developer debugging workflows, which speeds up remediation.

Burp Suite Professional is selected for scenarios where scanners alone cannot validate business logic risk. Manual request control reduces false confidence in automated results.

Nmap identifies live hosts, exposed ports, and listening services across network ranges. Accurate reconnaissance defines which attack paths warrant further investigation.

Service fingerprinting reveals protocol behavior and application versions running behind exposed ports. Correct identification prevents wasted effort during vulnerability scanning and exploitation.
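The discovery and fingerprinting steps described above can be sketched in a few lines of Python. This is a simplified illustration, not how Nmap works internally: Nmap relies on its own probe database and several scan types, while this sketch only performs a full TCP connect and reads whatever banner the service sends first. The names `probe_port` and `scan` are hypothetical.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def probe_port(host: str, port: int, timeout: float = 0.5) -> tuple[int, bool, str]:
    """Try a full TCP connect, then read any banner the service sends unprompted."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.settimeout(timeout)
            try:
                banner = conn.recv(128).decode("ascii", errors="replace").strip()
            except OSError:
                # The port is open, but the service waits for the client to speak first.
                banner = ""
            return port, True, banner
    except OSError:
        # Refused, filtered, or timed out: treat the port as not reachable.
        return port, False, ""


def scan(host: str, ports: list[int], workers: int = 32) -> dict[int, str]:
    """Return a mapping of open port -> banner text (empty if none was sent)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda p: probe_port(host, p), ports))
    return {port: banner for port, is_open, banner in results if is_open}
```

Calling `scan("127.0.0.1", [22, 80, 443])` against a host you are authorized to test returns only the ports that accepted a connection, which is the kind of short list that later scanning stages can focus on.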

Penetration testers use Nmap output to prioritize targets for deeper assessment. Clean reconnaissance data improves efficiency across the entire engagement.

Nmap is selected at the start of engagements to avoid blind testing. Accurate exposure mapping prevents wasted effort later.

Nessus provides large-scale vulnerability detection across operating systems, applications, and network services. Coverage consistency makes it suitable for enterprise environments.

Authenticated scanning exposes misconfigurations and missing patches that external testing cannot detect. Internal security posture becomes measurable instead of inferred.
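To make the idea of a missing patch concrete, here is a minimal sketch of the comparison an authenticated check performs once it can read the installed software list. It is not Nessus plugin logic. The package names, version numbers, and the `missing_patches` helper are all hypothetical, and the sketch assumes purely numeric dotted versions.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def missing_patches(installed: dict[str, str], fixed_in: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Return {package: (installed_version, first_fixed_version)} for outdated packages."""
    return {
        name: (have, fixed_in[name])
        for name, have in installed.items()
        if name in fixed_in and parse_version(have) < parse_version(fixed_in[name])
    }


# Hypothetical inventory gathered with valid credentials on the target host.
installed = {"examplelib": "1.9.2", "demo-server": "2.4.10"}
# Hypothetical advisory data: the first version that contains the fix.
fixed_in = {"examplelib": "1.10.0", "demo-server": "2.4.3"}

print(missing_patches(installed, fixed_in))
```

Only `examplelib` is reported here, because `1.9.2` is older than `1.10.0` once the parts are compared as numbers. An unauthenticated scan would usually have to guess this from banners.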

Security teams use Nessus reports to guide remediation across complex infrastructures. Findings frequently support audit, compliance, and governance workflows.

Nessus fits environments that require consistent vulnerability baselining across large asset inventories. Centralized reporting supports governance and audit needs.

Kali Linux delivers a dedicated operating system designed specifically for penetration testing and security research. Preconfigured toolchains eliminate setup overhead during assessments.

Network testing, web assessment, wireless analysis, and forensics all run from a single environment. Predictable tooling behavior improves reliability during live engagements.

Professional testers standardize on Kali Linux to maintain consistent workflows across teams. The platform supports both field testing and controlled lab environments.

Kali Linux reduces operational friction by standardizing tooling across teams. Consistent environments improve repeatability and collaboration.

OWASP ZAP approaches web security testing from an automation-first perspective while still allowing manual control when needed. The tool observes application behavior through a proxy layer, which makes vulnerability detection visible and repeatable.

Passive scanning surfaces issues without modifying traffic, which suits early testing and continuous integration environments. Active scanning then verifies whether those signals represent exploitable conditions rather than theoretical weaknesses.
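A passive check only inspects traffic that has already been exchanged. The sketch below shows that idea on a single set of response headers. It is an illustrative example rather than ZAP's actual rule set, and the header list and the `passive_findings` name are assumptions chosen for the demonstration.

```python
# Response headers this sketch expects to see (an illustrative selection only).
EXPECTED_HEADERS = (
    "content-security-policy",
    "strict-transport-security",
    "x-content-type-options",
    "x-frame-options",
)


def passive_findings(headers: dict[str, str]) -> list[str]:
    """Inspect already-captured response headers without sending any new request."""
    normalized = {name.lower(): value for name, value in headers.items()}
    findings = [
        f"Missing {name} header" for name in EXPECTED_HEADERS if name not in normalized
    ]
    cookie = normalized.get("set-cookie")
    if cookie is not None and "httponly" not in cookie.lower():
        findings.append("Cookie set without HttpOnly flag")
    return findings


captured = {"Content-Type": "text/html", "X-Frame-Options": "DENY", "Set-Cookie": "sid=abc; Secure"}
for finding in passive_findings(captured):
    print(finding)
```

Because nothing is sent to the application, a check like this can run on every proxied response. Each finding is only a signal that an active test or a person still has to confirm.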

ZAP often fits environments where security checks must run frequently without heavy operational overhead. Its outputs provide early warning signals that guide deeper manual investigation later.

OWASP ZAP is selected when open-source tooling must integrate into development workflows. Automation flexibility supports continuous testing without licensing constraints.

Acunetix focuses on automated dynamic testing for web applications at scale. The scanner prioritizes breadth and consistency across large application inventories.

Its crawler builds an internal map of application structure before launching targeted vulnerability tests. That mapping reduces blind spots that basic scanners often miss.
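The mapping step can be pictured as a breadth-first walk over links and form targets. The following sketch assumes a caller-supplied `fetch` function that returns the HTML for a URL, which is a hypothetical stand-in for an HTTP client. It is not Acunetix's crawler, which also handles JavaScript-driven navigation and much more.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect link targets from <a href> and <form action> attributes."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value and ((tag == "a" and name == "href") or (tag == "form" and name == "action")):
                self.links.append(value)


def build_site_map(start_url, fetch):
    """Breadth-first crawl that stays on the starting host.

    Returns {page_url: sorted list of absolute URLs that page points to}.
    """
    origin = urlparse(start_url).netloc
    site_map = {}
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        if url in site_map:
            continue  # Already visited.
        parser = LinkExtractor()
        parser.feed(fetch(url))
        targets = sorted({urljoin(url, link) for link in parser.links})
        site_map[url] = targets
        # Off-site links are recorded above but never followed.
        queue.extend(t for t in targets if urlparse(t).netloc == origin)
    return site_map
```

Passing a dictionary lookup as `fetch` (for example `lambda url: pages.get(url, "")`) is enough to try the function without touching a network. The resulting map is what later, targeted tests would iterate over.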

Acunetix frequently acts as a filtering layer that highlights where deeper manual testing should concentrate. The tool excels at reducing time spent on low-risk surfaces.

Acunetix suits organizations managing many web applications simultaneously. Automated coverage helps prioritize manual testing resources.

Intruder is designed around continuous visibility of internet-facing systems rather than periodic assessments. Cloud-based scanning keeps external exposure under constant observation.

The platform emphasizes prioritization so attention stays on vulnerabilities that materially affect attack surface risk. This approach avoids overwhelming users with low-impact findings.

Intruder often complements internal scanners by focusing exclusively on perimeter assets. Changes in exposure become easier to track over time.
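Tracking changes in exposure comes down to comparing one scan snapshot with the next. Here is a minimal sketch of that comparison, assuming each snapshot is a set of `(host, port)` pairs. The data and the `exposure_drift` name are hypothetical, and the sketch does not reflect Intruder's own data model.

```python
def exposure_drift(previous: set[tuple[str, int]], current: set[tuple[str, int]]) -> dict[str, list[tuple[str, int]]]:
    """Compare two perimeter snapshots and report what opened or closed between them."""
    return {
        "opened": sorted(current - previous),
        "closed": sorted(previous - current),
    }


# Hypothetical snapshots from two consecutive external scans.
last_week = {("app.example.com", 443), ("vpn.example.com", 1194)}
today = {("app.example.com", 443), ("app.example.com", 8080)}

print(exposure_drift(last_week, today))
# {'opened': [('app.example.com', 8080)], 'closed': [('vpn.example.com', 1194)]}
```

The "opened" list is the part that usually needs attention first, since it represents exposure nobody reviewed during the last assessment.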

Intruder is used to maintain awareness of internet-facing exposure between formal assessments. Continuous monitoring highlights risk drift.

SQLMap exists to validate one of the most damaging classes of application vulnerabilities: SQL injection. Automated payload generation allows direct interaction with backend databases once injection points are found.

Tamper scripts rewrite payloads so they can slip past input filters and defensive controls. This capability keeps SQLMap effective even against partially protected applications.

SQLMap typically enters the workflow after discovery confirms injection potential. The resulting database access demonstrates impact with unmistakable clarity.
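One way an injection point is judged credible is a boolean-based check: the same request is sent with a condition that is always true and one that is always false, and the responses are compared. The sketch below reproduces that idea against an in-memory SQLite database. The table, the two search functions, and `looks_injectable` are hypothetical, and SQLMap itself uses many more techniques than this one.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database for the demonstration."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
    db.executemany("INSERT INTO products (name) VALUES (?)", [("widget",), ("gadget",)])
    return db


def vulnerable_search(db, name):
    # Deliberately unsafe: user input is concatenated into the SQL string.
    return db.execute(f"SELECT id FROM products WHERE name = '{name}'").fetchall()


def safe_search(db, name):
    # Parameterized query: the input is always treated as data, never as SQL.
    return db.execute("SELECT id FROM products WHERE name = ?", (name,)).fetchall()


def looks_injectable(search, db, known_value: str) -> bool:
    """Boolean-based check: does an appended condition change the result as SQL would?"""
    baseline = search(db, known_value)
    when_true = search(db, f"{known_value}' AND '1'='1")
    when_false = search(db, f"{known_value}' AND '1'='2")
    return when_true == baseline and when_false != baseline


db = make_db()
print(looks_injectable(vulnerable_search, db, "widget"))  # True
print(looks_injectable(safe_search, db, "widget"))        # False
```

The parameterized version fails the check because the appended condition never reaches the SQL parser, which is also the fix a development team would apply.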

SQLMap is selected only after injection indicators appear credible. Controlled exploitation confirms real database risk.

Wireshark provides packet-level visibility into how systems communicate across a network. Captured traffic reveals protocol misuse, weak encryption, and unexpected data flows.

Stream reconstruction allows conversations to be examined in full context rather than as isolated packets. Subtle anomalies become easier to confirm through this lens.
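Stream reconstruction can be illustrated with a toy reassembler: group segments by direction, drop retransmitted copies, and join the payloads in sequence order. This is a deliberately simplified sketch. The `Segment` type is hypothetical, and gaps, overlapping segments, and sequence-number wraparound, all of which Wireshark handles, are ignored.

```python
from collections import defaultdict
from typing import NamedTuple


class Segment(NamedTuple):
    src: str      # "ip:port" of the sender
    dst: str      # "ip:port" of the receiver
    seq: int      # TCP sequence number of the first payload byte
    payload: bytes


def reassemble(segments: list[Segment]) -> dict[tuple[str, str], bytes]:
    """Rebuild one byte stream per direction from unordered, possibly repeated segments."""
    streams: dict[tuple[str, str], dict[int, bytes]] = defaultdict(dict)
    for seg in segments:
        # Keep the first copy seen for each sequence number; later copies are retransmits.
        streams[(seg.src, seg.dst)].setdefault(seg.seq, seg.payload)
    return {
        flow: b"".join(chunks[seq] for seq in sorted(chunks))
        for flow, chunks in streams.items()
    }


client, server = "10.0.0.5:4321", "10.0.0.9:80"
capture = [
    Segment(client, server, 105, b"login"),   # Arrived out of order.
    Segment(client, server, 100, b"GET /"),
    Segment(client, server, 100, b"GET /"),   # Retransmission.
    Segment(server, client, 900, b"200 OK"),
]
print(reassemble(capture)[(client, server)])  # b'GET /login'
```

Reading the rebuilt stream rather than individual packets is what makes it possible to confirm, for example, that a credential really crossed the wire in clear text.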

Wireshark often acts as the verification layer during assessments, confirming whether suspected behavior truly occurs on the wire. Packet evidence adds credibility to technical findings.

Wireshark provides packet-level confirmation that removes ambiguity from findings. Network evidence strengthens technical conclusions.

Aircrack-ng focuses on evaluating the real security posture of Wi-Fi networks rather than theoretical wireless design. Packet capture and monitoring expose how access points and clients behave under active observation.

Handshake analysis reveals whether encryption strength depends more on protocol choice or password quality. Injection and replay techniques highlight weaknesses that appear only during live wireless interaction.
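The dependence on password quality follows from how WPA/WPA2-Personal derives its key material: the pairwise master key is PBKDF2-HMAC-SHA1 over the passphrase, salted with the SSID, using 4096 iterations and a 32-byte output. The sketch below uses that derivation for a small dictionary check. As a simplification it compares derived keys directly, whereas real handshake testing verifies a candidate against the captured handshake's integrity code. The network name, passphrases, and function names are hypothetical.

```python
import hashlib
from typing import Iterable, Optional


def derive_pmk(passphrase: str, ssid: str) -> bytes:
    """Derive the WPA/WPA2-Personal pairwise master key from passphrase and SSID."""
    return hashlib.pbkdf2_hmac("sha1", passphrase.encode(), ssid.encode(), 4096, 32)


def find_passphrase(target_pmk: bytes, ssid: str, candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate whose derived key matches, or None if none does."""
    for candidate in candidates:
        if derive_pmk(candidate, ssid) == target_pmk:
            return candidate
    return None


# Hypothetical lab network with a weak, dictionary-style passphrase.
ssid = "LabNet"
target = derive_pmk("sunshine1", ssid)
print(find_passphrase(target, ssid, ["password", "letmein1", "sunshine1"]))  # sunshine1
```

The protocol is identical for a strong and a weak passphrase. Only the size of the candidate list needed to find a match changes, which is why findings so often point back to credential policy.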

Wireless assessments often depend on Aircrack-ng to validate assumptions about WPA and client isolation. Findings typically influence access point configuration, credential policy, and physical security controls.

Aircrack-ng is chosen when wireless security assumptions require validation. Packet-based testing reveals practical exposure.

Hydra is designed for testing live authentication mechanisms across a wide range of network services. Parallel login attempts simulate realistic attack pressure against exposed authentication endpoints.

Protocol support allows testing beyond web forms, including services like SSH, FTP, and databases. Rate handling options make it possible to observe how lockout and throttling controls respond.
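What a tester looks for when observing lockout behavior can be modeled without touching a network. The sketch below simulates a login service with a failure threshold and counts how many guesses are evaluated before the account locks. The `SimulatedLogin` class and its threshold are hypothetical, and nothing here reflects Hydra's internals or command-line options.

```python
from typing import Iterable, Optional


class SimulatedLogin:
    """In-memory stand-in for an authentication endpoint with a lockout threshold."""

    def __init__(self, credentials: dict[str, str], max_failures: int = 3):
        self.credentials = credentials
        self.max_failures = max_failures
        self.failures: dict[str, int] = {}

    def try_login(self, user: str, password: str) -> str:
        if self.failures.get(user, 0) >= self.max_failures:
            return "locked"
        if self.credentials.get(user) == password:
            self.failures[user] = 0  # A successful login resets the counter.
            return "ok"
        self.failures[user] = self.failures.get(user, 0) + 1
        return "denied"


def guesses_before_lockout(service: SimulatedLogin, user: str, guesses: Iterable[str]) -> Optional[int]:
    """Return how many guesses were evaluated before lockout, or None if it never triggered."""
    for count, guess in enumerate(guesses, start=1):
        if service.try_login(user, guess) == "locked":
            return count - 1
    return None


service = SimulatedLogin({"alice": "S3cure-Pass"}, max_failures=3)
print(guesses_before_lockout(service, "alice", ["a", "b", "c", "d", "e"]))  # 3
```

A result of `None` is the interesting finding: every guess was evaluated, so the control only logs failures instead of slowing the attack.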

Credential testing with Hydra often exposes gaps in account protection rather than weak passwords alone. Results clarify whether defensive controls slow attacks or simply log failures.

Hydra supports realistic credential stress testing under controlled conditions. Results highlight weaknesses in authentication controls.

OpenVAS provides vulnerability scanning through openly inspectable detection logic rather than proprietary rules. Transparency makes scan behavior easier to understand and tune.

Feed-driven updates keep vulnerability checks aligned with current disclosures. Scheduled execution supports recurring assessments without manual effort.

Self-hosted environments frequently rely on OpenVAS to maintain scanning control while preserving depth. Results often form the baseline for broader risk evaluation.

OpenVAS suits organizations that require self-hosted scanning transparency. Operational control outweighs vendor dependency.

BeEF shifts focus away from servers and places the browser at the center of security testing. Client-side behavior becomes observable once a browser context is controlled.

Hooked sessions allow interaction with active users, scripts, and browser features in real time. Weaknesses in trust boundaries and session handling surface quickly.

BeEF commonly supports scenarios involving phishing, malicious scripts, or unsafe browser extensions. Demonstrations highlight how client-side compromise bypasses hardened infrastructure.

BeEF is selected to illustrate client-side exposure rather than server compromise. Browser behavior reveals trust weaknesses.

John the Ripper evaluates password strength through offline cracking of captured credential hashes. Offline analysis removes rate limits and monitoring from the equation.

Rule-based attacks reveal how predictable password patterns undermine policy requirements. Weak credentials surface quickly even under moderate attack conditions.
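A rule-based attack expands each dictionary word into the variations people tend to choose, then hashes every candidate and compares it with the captured hashes. The sketch below assumes unsalted SHA-256 hashes and a tiny hand-written rule set, both chosen for brevity. John the Ripper has its own rule syntax and supports many hash formats, none of which is reproduced here.

```python
import hashlib
from typing import Iterable, Iterator


def mangle(word: str) -> Iterator[str]:
    """Yield common variations of a base word (a tiny, illustrative rule set)."""
    for base in (word, word.capitalize()):
        yield base
        for suffix in ("1", "123", "!", "2026"):
            yield base + suffix


def crack(target_hashes: set[str], wordlist: Iterable[str]) -> dict[str, str]:
    """Return {hash: recovered_password} for every target matched by a mangled word."""
    recovered = {}
    for word in wordlist:
        for candidate in mangle(word):
            digest = hashlib.sha256(candidate.encode()).hexdigest()
            if digest in target_hashes:
                recovered[digest] = candidate
    return recovered


# Hypothetical audit: one password follows a predictable pattern, one does not.
targets = {
    hashlib.sha256(b"Summer2026").hexdigest(),
    hashlib.sha256(b"v9#Lq!t2Zr").hexdigest(),
}
print(list(crack(targets, ["summer", "winter"]).values()))  # ['Summer2026']
```

"Summer2026" satisfies a typical length, case, and digit policy yet falls to a two-word dictionary, which is the gap between policy compliance and real resilience that an audit is meant to show.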

Password audits often rely on John to demonstrate practical cracking feasibility. Results provide direct evidence for improving credential policies and storage practices.

John the Ripper avoids production impact while measuring credential resilience. Offline analysis produces safer evidence.

Selecting a penetration testing tool in 2026 requires focus on capabilities that support modern attack surfaces and evolving security workflows.

Automation accelerates discovery by reducing manual effort during reconnaissance and exploitation steps. AI-driven analysis can improve accuracy by flagging complex patterns that manual review tends to miss.

Pipeline integration enables continuous testing inside CI/CD and DevSecOps environments. Tight integration helps stop vulnerable code before it reaches production.
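In a pipeline, this usually takes the form of a gate: scanner output is parsed, and the build fails when any finding reaches a chosen severity. Here is a minimal sketch of such a gate. The findings format, the severity scale, and the `gate` function are assumptions, since every scanner exports its own report structure.

```python
SEVERITY_RANK = {"info": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


def gate(findings: list[dict], fail_at: str = "high") -> tuple[int, list[dict]]:
    """Return (exit_code, blocking_findings); a non-zero exit code should fail the build."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold]
    return (1 if blocking else 0), blocking


# Hypothetical scanner output, already parsed into dictionaries.
findings = [
    {"id": "missing-header", "severity": "low"},
    {"id": "sql-injection", "severity": "critical"},
]
exit_code, blocking = gate(findings)
print(exit_code, [f["id"] for f in blocking])  # 1 ['sql-injection']
```

A CI job would pass the exit code to `sys.exit` so the pipeline stops. Choosing `fail_at` is a policy decision, and a threshold set too low tends to get the gate disabled rather than respected.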

Coverage depth ensures evaluation of networks, applications, cloud resources, and identity systems with equal rigor. Broader assessment scope reduces blind spots across large infrastructures.

Usability factors influence how quickly teams execute tests and interpret findings. Clear layouts and intuitive workflows support fast action during high-pressure engagements.

Reporting support provides structured outputs for audits, remediation plans, and compliance requirements. Exportable formats help communicate findings to technical and managerial stakeholders.

Beginner-friendly penetration testing tools offer simple interfaces, predictable workflows, and minimal setup requirements.

OWASP ZAP provides a straightforward approach to web testing with automated scanning and clear navigation. Built-in guidance supports learning while maintaining practical testing depth.

Nmap introduces foundational network enumeration techniques through simple commands and readable outputs. Early familiarity with Nmap builds strong fundamentals for more advanced assessments.

Nikto performs quick web server checks without complex configuration steps. The tool highlights outdated components and basic misconfigurations in an easy-to-understand format.

Kali Linux delivers a curated suite of security tools inside a structured environment. Preinstalled resources allow beginners to experiment without manual setup or dependency management.

Penetration testing in 2026 demands tools that provide measurable proof of risk rather than theoretical alerts. A well-chosen toolkit gives security teams the clarity needed to confirm real exposure across web, network, identity, and cloud surfaces.

Effective tools stand out by producing consistent results, remaining well-maintained, and integrating smoothly into modern workflows. Precision, reliability, and actionable output matter far more than long feature lists.

Teams that invest in a balanced mix of automation, manual control, and post-compromise capability gain stronger situational awareness. That combination accelerates remediation, strengthens security posture, and supports more confident decision-making.

Penetration testing tools provide verified evidence of where systems can be compromised. Clear outputs guide remediation by showing exactly which weaknesses matter.

Automated scanners assist with broad discovery, but manual testing confirms actual exploitability. Both methods are needed for reliable results.

OWASP ZAP and Nmap offer simple workflows that help new testers build foundational skills. Both tools provide predictable output without steep learning curves.

Most tools target known weaknesses, although exploitation frameworks can help probe behavior that no existing signature covers. Zero-day discovery still depends primarily on skilled manual analysis.

Wireless assessments typically rely on tools like Aircrack-ng to capture handshakes and analyze encryption strength. Packet-level evidence reveals practical exposure.

Cloud-compatible tools evaluate identity settings, exposed services, and misconfigured permissions. Findings help validate security posture across dynamic cloud workloads.

Cobalt Strike and similar platforms simulate adversary behavior after initial access is gained. These tools measure detection and response maturity under realistic conditions.

Tools with automation support, such as OWASP ZAP or Acunetix, can be integrated into development workflows. Pipeline scanning helps catch vulnerable code before it reaches production.

Continuous scanning helps maintain visibility, while full penetration tests run at defined intervals. Both approaches strengthen long-term security posture.

Metasploit requires familiarity with exploitation workflows and payload behavior. Skilled operators gain deeper value by validating real attack paths.