
Artificial intelligence plays a key role in threat detection by enabling systems to identify malicious activity from behavior and context rather than relying only on known rules or signatures. This allows threats to be detected even when they are new, previously unseen, or designed to evade traditional security tools.

AI threat detection helps security teams handle large volumes of activity by automatically flagging unusual behavior and prioritizing higher-risk signals. As a result, detection becomes faster, more scalable, and less dependent on constant manual review.

In practice, AI threat detection can also extend visibility beyond internal systems. Platforms like CloudSEK help organizations apply it by tracking external exposure and highlighting risks that need immediate attention.

AI threat detection is the use of artificial intelligence and machine learning to identify malicious activity within digital systems by analyzing security-related data. It determines whether activity is normal or suspicious without relying entirely on predefined rules or known attack signatures.

Unlike traditional rule-based security tools, AI threat detection focuses on behavior and context across users, devices, and systems. This allows threats to be identified even when no prior pattern or signature exists.

As a result, risks such as malware, phishing attempts, and insider misuse can often be detected earlier and more accurately than with signature-based methods alone. This improves visibility into emerging threats and supports faster decision-making.

AI-based threat detection works by continuously monitoring security data across systems and comparing it with a baseline of expected behavior. This analysis can run in near real time and does not depend solely on manually defined rules.

As activity occurs, machine learning models evaluate patterns such as user access, system actions, and data movement. When behavior deviates from its normal range, the system treats it as an anomaly and flags it as a potential threat.
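The baseline comparison described above can be sketched with a simple z-score, used here in place of a trained model. A value is flagged when it lies more than a chosen number of standard deviations from the user's own history. The function name, the 3.0 threshold, and the sample data are illustrative assumptions. They do not describe how any particular product implements this step.

```python
from statistics import mean, stdev

def is_anomalous(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Flag `value` if it lies more than `threshold` standard deviations
    from the mean of the historical baseline (hypothetical helper)."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline yet
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu  # any change from a perfectly constant baseline stands out
    return abs(value - mu) / sigma > threshold

# Hypothetical example: megabytes downloaded per day by one user
daily_mb = [120, 95, 130, 110, 105, 125, 115]
print(is_anomalous(daily_mb, 118))  # False
print(is_anomalous(daily_mb, 900))  # True
```

Production systems usually model many features jointly. The principle is the same, though: the baseline is learned from observed data rather than written as a fixed rule.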

Over time, the models improve by learning from new data and outcomes, reducing unnecessary alerts. This allows detection systems to adapt continuously as environments and attack methods change.
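This feedback loop can be pictured as a baseline that updates incrementally as new observations arrive. The sketch below uses Welford's online algorithm to maintain the running mean and variance. It skips observations that analysts have confirmed as malicious, so that attacks are not learned as normal behavior. `OnlineBaseline` and `record_outcome` are hypothetical names, and real systems retrain far richer models.

```python
import math

class OnlineBaseline:
    """Running mean and sample variance (Welford's algorithm), updated
    one observation at a time without storing the full history."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def score(self, x: float) -> float:
        """Distance from the baseline in standard deviations (0.0 if no spread yet)."""
        s = self.std
        return abs(x - self.mean) / s if s > 0 else 0.0


def record_outcome(baseline: OnlineBaseline, x: float, confirmed_malicious: bool) -> None:
    # Fold only benign or unconfirmed observations into the baseline, so
    # confirmed attacks do not gradually become "normal" behavior.
    if not confirmed_malicious:
        baseline.update(x)


baseline = OnlineBaseline()
for value in [2, 4, 4, 4, 5, 5, 7, 9]:
    record_outcome(baseline, value, confirmed_malicious=False)
record_outcome(baseline, 500, confirmed_malicious=True)  # excluded from the baseline
print(baseline.n, round(baseline.mean, 3))  # 8 5.0
```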

AI is important in modern threat detection because it enables security systems to identify, prioritize, and surface threats that are difficult to detect using traditional methods.

AI threat detection relies on a set of core concepts that explain how intelligent systems identify malicious activity beyond traditional rule-based methods. These concepts define how threats are recognized, evaluated, and surfaced.

Machine learning allows threat detection systems to learn patterns from security data instead of following manually defined rules. This enables detection logic to improve over time as new activity and outcomes are observed.

Rather than focusing on known attack signatures, behavior analysis looks at how users, devices, and applications normally operate. Deviations from expected behavior help reveal suspicious or malicious activity.

Anomaly detection identifies activity that falls outside normal behavioral patterns learned by the system. This concept is critical for detecting unknown threats and zero-day attacks that lack existing signatures.

Context awareness means activity is evaluated against factors such as user role, access level, timing, and historical behavior. This reduces false positives by distinguishing genuinely risky behavior from legitimate actions.
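The sketch below scores the same access to a sensitive resource differently depending on its context. The fields and multipliers are hypothetical weights chosen for readability. A real system would learn or tune them from data rather than hard-code them.

```python
from dataclasses import dataclass

@dataclass
class AccessEvent:
    resource_sensitivity: int   # 1 (low) to 3 (high)
    role_requires_access: bool  # does the user's role normally need this resource?
    hour: int                   # local hour of day, 0-23
    seen_before: bool           # has this user accessed this resource before?

def risk_score(e: AccessEvent) -> float:
    """Hypothetical context-weighted risk score; higher means riskier."""
    score = float(e.resource_sensitivity)
    if not e.role_requires_access:
        score *= 2.0   # access outside the user's role
    if e.hour < 7 or e.hour >= 20:
        score *= 1.5   # outside typical working hours
    if not e.seen_before:
        score *= 1.5   # no historical precedent for this access
    return score

routine = AccessEvent(3, role_requires_access=True, hour=10, seen_before=True)
unusual = AccessEvent(3, role_requires_access=False, hour=2, seen_before=False)
print(risk_score(routine), risk_score(unusual))  # 3.0 13.5
```

The resource is identical in both events. Only the surrounding context changes the score.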

Continuous learning allows detection models to adjust as environments and attack techniques change. By updating their understanding automatically, AI systems remain effective without constant manual tuning.

AI-based threat detection is applied differently depending on the nature of the threat. By learning how activity normally unfolds, it becomes possible to surface attacks that rely on subtle changes rather than obvious signatures.

Malicious software often behaves differently once it begins running inside a system, such as attempting to hide processes or modify critical settings. By learning how legitimate applications usually operate, AI-driven analysis can surface malware even when it has never been seen before.

Ransomware activity tends to stand out through sudden bursts of file encryption, unusual file access patterns, or rapid permission changes. Recognizing these actions early helps interrupt an attack before systems or data are fully locked.
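One common heuristic for spotting encryption bursts is sketched below under simplifying assumptions. It measures the Shannon entropy of data being written, since encrypted output approaches 8 bits per byte. It then alerts when many high-entropy writes occur within a short sliding window. The class name, window length, write count, and 7.5-bit cutoff are illustrative assumptions.

```python
import math
from collections import Counter, deque

def shannon_entropy(data: bytes) -> float:
    """Entropy in bits per byte: 0.0 for constant data, up to 8.0 for uniform bytes."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


class EncryptionBurstDetector:
    """Hypothetical detector: alert when many high-entropy writes land in a
    short window. Assumes writes are observed in non-decreasing time order."""

    def __init__(self, window_s: float = 10.0, max_writes: int = 20,
                 entropy_cutoff: float = 7.5) -> None:
        self.window_s = window_s
        self.max_writes = max_writes
        self.entropy_cutoff = entropy_cutoff
        self._times = deque()  # timestamps of recent high-entropy writes

    def observe_write(self, timestamp: float, content: bytes) -> bool:
        """Record one file write; return True if the burst threshold is reached."""
        if shannon_entropy(content) < self.entropy_cutoff:
            return False  # looks like ordinary, structured data
        self._times.append(timestamp)
        # Forget high-entropy writes that have slid out of the time window
        while timestamp - self._times[0] > self.window_s:
            self._times.popleft()
        return len(self._times) >= self.max_writes
```

Compressed formats such as ZIP archives and JPEG images are also high-entropy. A heuristic like this therefore needs allow-lists or additional signals, such as mass file renames or deletion of backups, to keep false positives down. Very short writes cannot reach high entropy at all, because n bytes carry at most log2(n) bits per byte.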

Deceptive messages usually reveal themselves through inconsistencies in communication patterns, sender behavior, or how users interact with them. Analyzing these signals allows phishing attempts to be flagged even when they closely resemble legitimate messages.
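One concrete sender-behavior signal is a lookalike domain, such as `examp1e.com` imitating `example.com`. The sketch below flags sender domains within a small Levenshtein edit distance of a trusted domain. The trusted list and the distance threshold of 2 are assumptions. Real filters combine many more signals, such as homoglyph checks, domain age, and SPF/DKIM/DMARC results.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn `a` into `b`."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # deletion
                            curr[j - 1] + 1,             # insertion
                            prev[j - 1] + (ca != cb)))   # substitution
        prev = curr
    return prev[-1]

def is_lookalike(sender_domain: str, trusted: set[str], max_distance: int = 2) -> bool:
    """True if the domain is close to, but not exactly, a trusted domain."""
    d = sender_domain.lower()
    if d in trusted:
        return False  # exact match: the real domain, not an imitation
    return any(levenshtein(d, t) <= max_distance for t in trusted)

# Hypothetical trusted domains for an organization
trusted_domains = {"example.com", "example-bank.com"}
print(is_lookalike("examp1e.com", trusted_domains))  # True
```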

Abuse of legitimate access becomes noticeable when user behavior changes unexpectedly, such as accessing data at unusual times or moving large volumes of information. Monitoring these shifts over time helps expose compromised or misused accounts without relying on predefined rules.
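The two changes in behavior mentioned above, unusual access times and unusually large transfers, can be sketched as per-user checks against that user's own history. The function name, the 5% rare-hour share, and the 10x volume factor are hypothetical choices for illustration.

```python
from collections import Counter
from statistics import median

def flag_access(past_hours: list[int], past_mb: list[float], hour: int, mb: float,
                rare_hour_share: float = 0.05, volume_factor: float = 10.0) -> list[str]:
    """Return reasons an access looks unusual for this user (empty list if none)."""
    reasons = []
    # How often has this user been active at this hour before?
    if past_hours:
        share = Counter(past_hours)[hour] / len(past_hours)
        if share < rare_hour_share:
            reasons.append(f"rare access hour {hour:02d}:00")
    # Is this transfer far larger than the user's typical (median) transfer?
    if past_mb and mb > volume_factor * median(past_mb):
        reasons.append(f"transfer of {mb:.0f} MB far above usual volume")
    return reasons

# Hypothetical history for one user: office-hours activity, modest transfers
hours = [9, 10, 10, 11, 14, 15, 16, 9, 10, 11]
transfers_mb = [20, 35, 15, 40, 25]
print(flag_access(hours, transfers_mb, hour=10, mb=30))  # []
print(flag_access(hours, transfers_mb, hour=3, mb=800))
# ['rare access hour 03:00', 'transfer of 800 MB far above usual volume']
```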

Previously unknown attacks rarely match established signatures but often disrupt normal activity patterns. Spotting these irregular behaviors allows new threats to be detected before they are formally identified.

AI models used in threat detection are created through a structured training process that prepares them to recognize malicious behavior before they are deployed in real environments. This process focuses on threat relevance, data quality, and continuous improvement rather than generic model building.

Model development begins by determining what types of threats the system needs to detect, such as malware, phishing, or insider activity. Clear objectives ensure the model learns patterns that are relevant to real security risks instead of generic system behavior.

Training relies on large volumes of security-related data collected from logs, network traffic, endpoints, and user activity. This data is cleaned and structured so the model can focus on meaningful signals that differentiate normal behavior from malicious actions.
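The sketch below illustrates the cleaning and structuring step. It parses raw authentication log lines into structured records with a few derived features, and it drops lines that cannot be parsed. The log format is hypothetical. Real sources such as firewall, endpoint, or identity-provider logs each need their own parser.

```python
import re
from datetime import datetime
from typing import Optional

# Hypothetical log format, for example:
# "2024-05-01T03:12:45 user=Alice action=login src=10.0.0.5 status=fail"
LINE_RE = re.compile(
    r"(?P<ts>\S+) user=(?P<user>\S+) action=(?P<action>\S+) "
    r"src=(?P<src>\S+) status=(?P<status>\S+)"
)

def parse_line(line: str) -> Optional[dict]:
    """Turn one raw log line into a structured feature record, or None if malformed."""
    m = LINE_RE.fullmatch(line.strip())
    if m is None:
        return None  # drop lines that do not match rather than feed noise to the model
    try:
        ts = datetime.fromisoformat(m["ts"])
    except ValueError:
        return None  # unparseable timestamp
    return {
        "user": m["user"].lower(),        # normalize identifiers across sources
        "action": m["action"],
        "src": m["src"],
        "failed": m["status"] == "fail",  # categorical status -> boolean feature
        "hour": ts.hour,                  # time-of-day feature
        "weekend": ts.weekday() >= 5,     # Saturday or Sunday
    }

raw = [
    "2024-05-01T03:12:45 user=Alice action=login src=10.0.0.5 status=fail",
    "corrupted line ###",
]
records = [r for r in map(parse_line, raw) if r is not None]
```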

Machine learning techniques are applied to help the model recognize patterns associated with both legitimate activity and known threats. Through repeated exposure to these patterns, the model learns how malicious behavior differs from normal system usage.

Before deployment, models are evaluated on unseen data to measure detection accuracy and error rates. This step checks that the model performs reliably under realistic conditions without generating excessive false alerts.
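The evaluation step can be sketched as computing standard metrics from predictions on held-out data. Precision measures how many alerts were real. Recall measures how many real threats were caught. The false positive rate measures how often benign activity triggered an alert. The function below is a minimal illustration, not a full evaluation harness.

```python
def evaluate(y_true: list[bool], y_pred: list[bool]) -> dict[str, float]:
    """Compute detection metrics on held-out data, where True means malicious."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # threats correctly flagged
    fp = sum(1 for t, p in pairs if not t and p)      # benign activity flagged
    fn = sum(1 for t, p in pairs if t and not p)      # threats missed
    tn = sum(1 for t, p in pairs if not t and not p)  # benign activity left alone
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

# Hypothetical labels for six held-out events
labels = [True, True, False, False, False, False]
predictions = [True, False, True, False, False, False]
print(evaluate(labels, predictions))
# {'precision': 0.5, 'recall': 0.5, 'false_positive_rate': 0.25}
```

Because malicious events are rare, even a small false positive rate matters. A 1% rate applied to one million benign events still produces 10,000 false alerts.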

After deployment, models are updated using new data and confirmed threat outcomes. This ongoing retraining allows detection capabilities to remain effective as attack techniques and environments evolve.

AI reduces false positives and alert fatigue by improving how detection systems evaluate, filter, and prioritize security signals before they reach human analysts.

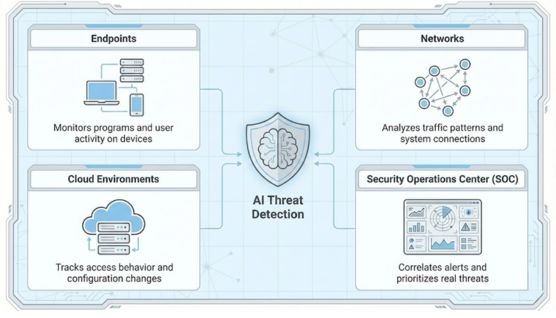

AI is used at different points within threat detection systems based on where security-relevant activity is generated and observed. Each placement focuses on a specific type of signal so suspicious behavior can be identified closer to its source.

On endpoints such as laptops, servers, and mobile devices, AI analyzes how programs run and how users interact with the system. This helps surface malicious activity, misuse, or unauthorized actions directly on the device where they occur.

Within networks, AI examines communication patterns between systems and monitors how data moves across connections. Unexpected connections or abnormal traffic behavior can indicate intrusions, lateral movement, or data leakage.
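One well-known network pattern is command-and-control beaconing, where malware contacts its server at near-regular intervals. The sketch below flags a series of connections from one host to one destination when its inter-arrival times have a low coefficient of variation. The thresholds are assumptions. Real malware often adds random jitter specifically to evade this kind of check.

```python
from statistics import mean, pstdev

def looks_like_beaconing(timestamps: list[float], min_events: int = 6,
                         max_cv: float = 0.1) -> bool:
    """Flag a series of connections (one source host to one destination) whose
    inter-arrival gaps are suspiciously regular."""
    if len(timestamps) < min_events:
        return False  # too few connections to judge regularity
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    avg_gap = mean(gaps)
    if avg_gap == 0:
        return False
    # Coefficient of variation: spread of the gaps relative to their average
    return pstdev(gaps) / avg_gap < max_cv

# Hypothetical connection times in seconds
periodic = [0, 60, 120, 181, 240, 300, 360]  # roughly once a minute
human = [0, 5, 47, 300, 310, 900, 1500]      # bursty, irregular browsing
print(looks_like_beaconing(periodic), looks_like_beaconing(human))  # True False
```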

In cloud environments, AI observes application activity, access behavior, and configuration changes across shared infrastructure. This helps identify risks related to unauthorized access, compromised accounts, or unsafe configuration changes.

At the operations level, AI supports threat detection by organizing and prioritizing security signals. By correlating related activity and reducing noise, it helps analysts focus on incidents that are more likely to represent real threats.
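Correlation can be sketched as grouping alerts about the same host or user that occur close together in time into a single incident. Incidents are then ranked so that those corroborated by several independent sources come first. The data model, the 15-minute window, and the priority formula are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Alert:
    entity: str       # host or user the alert concerns
    timestamp: float  # seconds
    severity: int     # 1 (low) to 5 (critical)
    source: str       # e.g. "endpoint", "network", "cloud"

@dataclass
class Incident:
    entity: str
    alerts: list[Alert] = field(default_factory=list)

    @property
    def priority(self) -> int:
        # Highest severity, plus one point per additional independent source
        sources = {a.source for a in self.alerts}
        return max(a.severity for a in self.alerts) + len(sources) - 1

def correlate(alerts: list[Alert], window_s: float = 900.0) -> list[Incident]:
    """Group alerts on the same entity that arrive within window_s of the
    previous alert in the group, then rank incidents by priority."""
    latest: dict[str, Incident] = {}  # most recent incident per entity
    incidents: list[Incident] = []
    for a in sorted(alerts, key=lambda x: x.timestamp):
        inc = latest.get(a.entity)
        if inc is None or a.timestamp - inc.alerts[-1].timestamp > window_s:
            inc = Incident(a.entity)  # gap too large: start a new incident
            latest[a.entity] = inc
            incidents.append(inc)
        inc.alerts.append(a)
    return sorted(incidents, key=lambda i: i.priority, reverse=True)

alerts = [
    Alert("host-a", 0, 3, "endpoint"),
    Alert("host-a", 120, 2, "network"),
    Alert("host-b", 60, 2, "cloud"),
    Alert("host-a", 5000, 1, "endpoint"),
]
for inc in correlate(alerts):
    print(inc.entity, len(inc.alerts), inc.priority)
# host-a 2 4
# host-b 1 2
# host-a 1 1
```

Four raw alerts become three incidents. The one corroborated by both endpoint and network signals rises to the top.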

AI-based threat detection improves the ability to identify and manage security threats at scale, but it also has practical limitations. Understanding both benefits and constraints is necessary to use AI effectively in threat detection.

Implementing AI-based threat detection works best when it is treated as an operational capability rather than a one-time setup. The focus should be on making detection useful, trustworthy, and aligned with how security teams actually work.

Start by deciding which threats matter most and where current detection falls short. This keeps AI focused on real risks instead of monitoring everything without purpose.

Detection accuracy depends on the quality of security data feeding the system. Incomplete or inconsistent data limits what AI can realistically identify.

Detection results should flow into existing monitoring and response processes. When alerts fit naturally into daily operations, they are more likely to be reviewed and acted on.

AI output still needs human judgment, especially early on. Reviewing detections helps teams understand results, adjust sensitivity, and build confidence in the system.

Introducing AI detection in stages makes issues easier to spot and correct. Gradual rollout reduces disruption and prevents sudden alert overload.

Threat detection is not static, and neither is AI performance. Regular review and tuning are necessary as environments and threat behavior change.

CloudSEK supports AI threat detection by continuously monitoring an organization’s external digital footprint across the surface web, deep web, and dark web. Its AI analyzes large volumes of online data and delivers real-time, contextual intelligence through platforms such as XVigil, helping teams focus on the most critical risks.

CloudSEK’s AI works by collecting and organizing data from many sources, such as social media, public websites, forums, and underground channels. Using machine learning and predictive analytics, it identifies patterns related to emerging threats, vulnerabilities, and threat actor behavior before attacks take place.

This intelligence supports areas like digital risk protection, attack surface management, data leak detection, and supply chain security. By prioritizing high-impact issues such as brand impersonation, exposed assets, and leaked data, CloudSEK reduces alert fatigue and enables faster action to protect business operations, reputation, and revenue.